I. Overview

II. Installation files

- CentOS

- Hadoop - 3.1.0

- Zookeeper - 3.4.10

- jdk - 1.8.0_191

III. Creating the VMs

- 1. Create a new VM

- A) VM name and operating system

- B) Memory size

- C) Hard disk

- 2. Edit the VM settings

- A) General settings

- B) System settings

- C) Network settings

- 3. Start the VM

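IV. Installing Linux

- 1. Start

- 2. Language

- 3. Installation summary

- 4. Software selection

- 5. Installation destination

- 6. KDUMP

- 7. Network and hostname

- 8. Begin installation

- 9. User settings

- 10. Reboot

- 11. Initial setup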

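V. Prerequisites

- 1. Install VirtualBox Guest Additions

- 2. Change the hostname

- 3. Edit the hosts file

- 4. Assign a static IP

- 5. Reboot

- 6. Connectivity test

- 7. SELinux configuration

- 8. Firewall configuration

- 9. SSH configuration

VI. Installing Java

- 1. Install Java

- 2. Set the Java environment variables

VII. Installing Zookeeper

- Create the Zookeeper account

- Set up SSH for the Zookeeper account

- Install Zookeeper

- Configure Zookeeper

- Re-archive the configured Zookeeper directory

- Distribute the archive to rmnode1 and datanode1

- Set myid

- Start the Zookeeper servers

VIII. Installing Hadoop

- Create the Hadoop account

- Set the Hadoop account permissions

- Set up SSH for the Hadoop account

- Install Hadoop

- Configure the Hadoop files

- Re-archive and distribute Hadoop

- Set the Hadoop environment variables

IX. Running Zookeeper and Hadoop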

I. Overview

Hadoop configuration specs

Guest OS - CentOS 7

Hadoop - 3.1.0

Zookeeper - 3.4.10

jdk - 1.8.0_191

Hadoop HA layout using four servers:

namenode1: active NameNode, JournalNode

rmnode1: standby NameNode, ResourceManager, JournalNode, DataNode

datanode1: JournalNode, DataNode

datanode2: DataNode

| Server | namenode1 | rmnode1 | datanode1 | datanode2 |
|---|---|---|---|---|
| OS | centos7 | centos7 | centos7 | centos7 |
| Disk Size | 30G | 30G | 30G | 30G |
| Memory Size | 4G | 2G | 2G | 2G |
| IP | 192.168.56.200 | 192.168.56.201 | 192.168.56.202 | 192.168.56.203 |
| Host name | namenode1 | rmnode1 | datanode1 | datanode2 |

This guide builds a Hadoop cluster configured for HA (high availability) using these four nodes.

III. 설치 파일

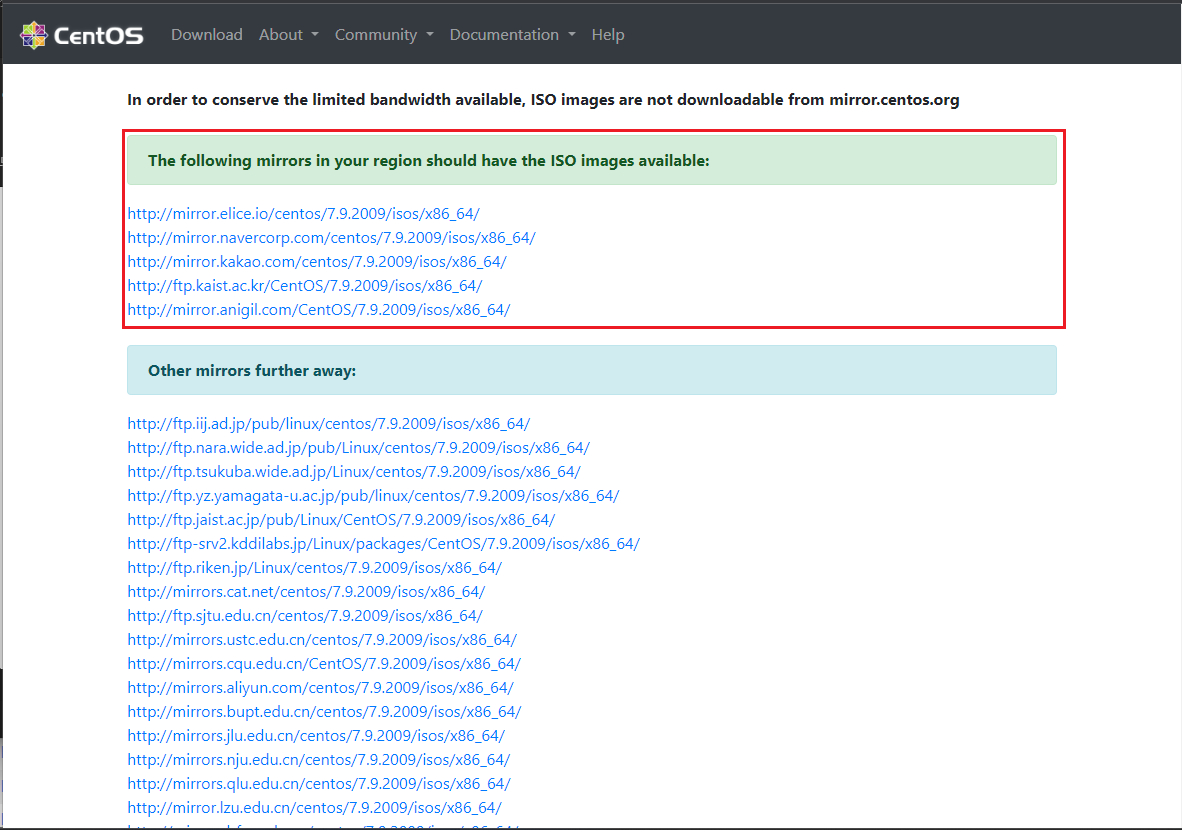

1. CentOS

• CentOS 7 iso 이미지를 다운 받기 위해 아래 RUL로 접속합니다..

https://www.centos.org/download/


• URL로 접속하면 아래의 화면이 나오는데 x86_64를 클릭합니다.

• 붉은 박스 안에 있는 URL을 클릭하여 미러링 사이트로 이동합니다.

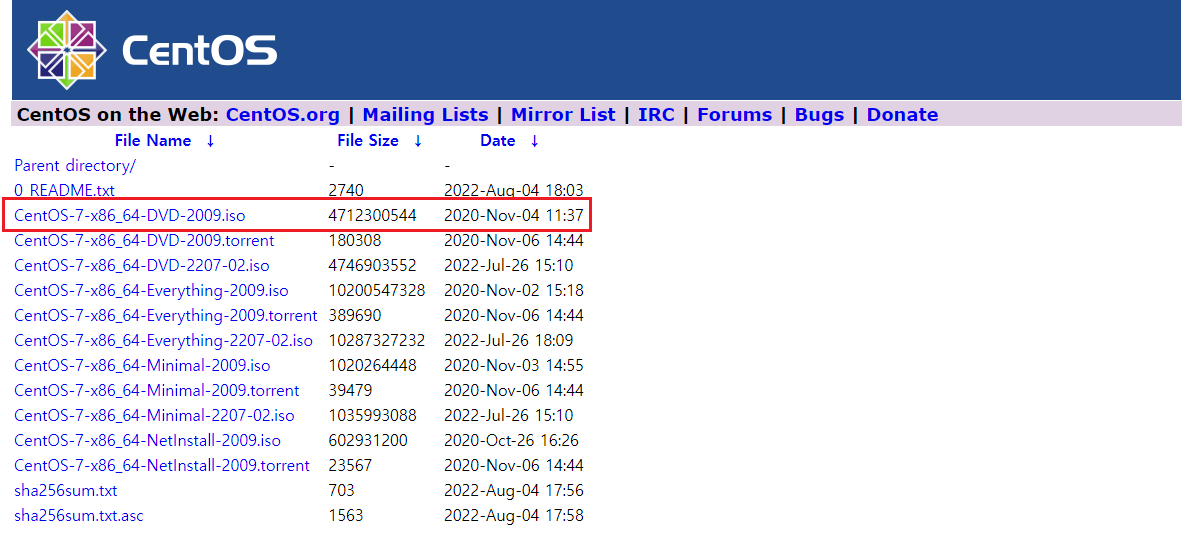

• 미러링 사이트로 이동했으면 " CentOS-7-x86_64-DVD-2009.iso " 파일을 다운로드 받습니다.

• URL로 접속하면 아래의 화면이 나오는데 x86_64를 클릭합니다.

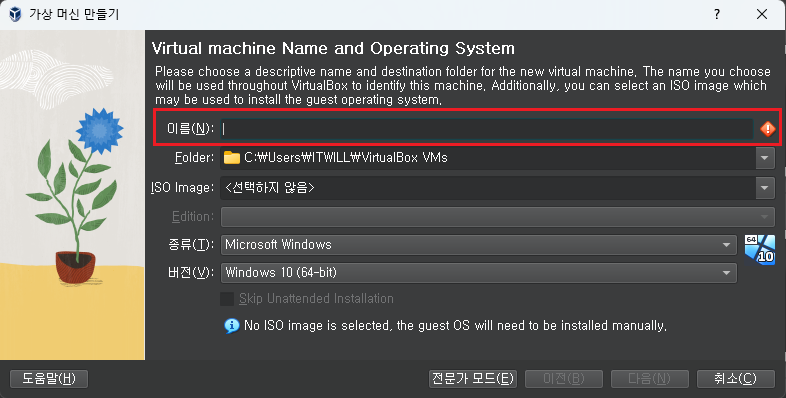

III. Creating the VMs

1. Create a new VM

※ Repeat the steps below to create four VMs: namenode1, rmnode1, datanode1, and datanode2.

• In VirtualBox, click <New> to create a virtual machine (hereafter VM).

A) VM name and operating system

• First, enter the name of the VM. Choose a name that reflects its purpose or the software it will run, and type it into the 'Name' field.

• To change where the VM is stored, select 'Other' under 'Machine Folder'.

• When the Windows file browser opens, pick a suitable drive and directory for the VM.

• A directory named after the VM is created under the chosen path, and the VM's files are stored inside it.

• A VM's virtual environment takes up a lot of disk space, so choose a path with plenty of free space.

• Select the type of operating system to install.

• Linux is the usual choice for testing, but any operating system that VirtualBox supports can be selected.

• Select the version of the operating system.

• Linux comes in many distributions, and a single distribution may offer both 32-bit and 64-bit variants.

• Once the VM name, storage path, and operating system are set, click <Next>.

B) Memory size

• Enter the memory size and number of processors for the VM, then click <Next>.

C) Hard disk

• To build a new VM, select 'Create a virtual hard disk now' and click <Create>.

• If you already have a VM disk file (vdi, vhd, vmdk, etc.), select 'Use an existing virtual hard disk file' and browse to the path where the file is stored to register it.

• By default, the virtual disk file is created as 'dynamically allocated'.

• If the storage has to be allocated up front, check "Pre-allocate Full Size".

• Review the VM name, storage path, and operating system, and if everything looks right, click <Finish>.

2. VM 설정 편집

• VM이 정상적으로 생성되었습니다.

• 추가적인 설정을 변경하기 위해 <설정>을 클릭합니다.

A) 일반 설정 변경

• 왼쪽에 항목 중에서 '일반'으로 이동한 후, '고급'탭으로 이동 합니다.

• "클립보드 공유"와 "드래그 앤 드롭"에서 "양뱡향"을 선택합니다.

A) 시스템 설정 변경

• 왼쪽에 항목 중에서 '시스템'으로 이동한 후, 부팅 순서에 있는 '플로피'의 선택을 해제 합니다.

• 현재는 플로피 디스크를 사용한 시스템 부팅이 거의 사용되지 않으므로 해제해도 되는 옵션입니다.

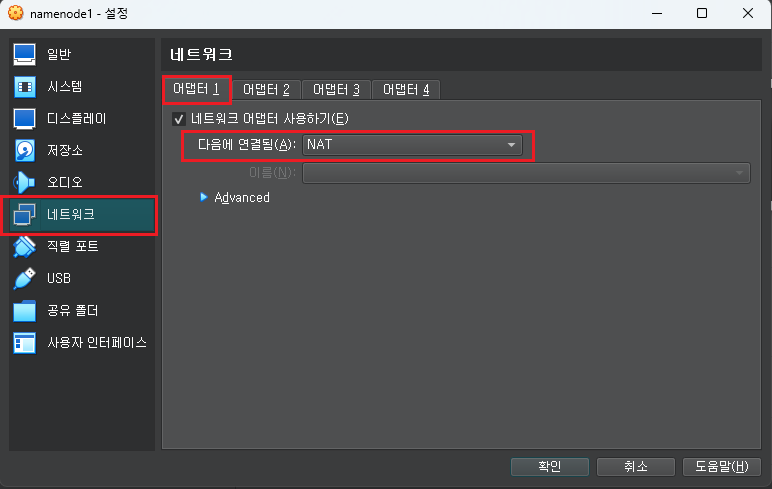

B) 네트워크 설정 변경

• '네트워크' 항목으로 이동하여 '어댑터 1'의 '다음에 연결됨'에 'NAT'를 선택합니다.

• '어댑터 2'로 이동하여 네트워크 어댑터 사용하기를 체크합니다

• '다음에 연결됨'에 '호스트 전용 어댑터'를 선택하고 이름이 'VirtualBox Host-Only Ethernet Adapter'로 되어 있는지 확인합니다.

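VirtualBox: creating a host network

• Start VirtualBox and click "File" > "Tools" > "Network Manager".

• When the host network manager window opens, click <Create>.

• If a User Account Control prompt asks whether to allow device changes on the Windows host, click "Yes".

• The host-only network is now registered.

• The IP of the host-only network is assigned automatically.

• To change the IP range of the host-only network manually, check "Configure Adapter Manually" at the bottom.

• Change the IPv4 address to the first IP of the range you want and set the IPv4 subnet mask to "255.255.255.0".

• Click "Apply".

• Because this changes the host network settings, click "Yes" if a User Account Control prompt appears on the Windows host.

• Confirm that the IP and subnet mask have changed.

• Back in the VM's network settings, click "Advanced" to reveal the options below.

• Set "Promiscuous Mode" to "Allow All".

• When the settings are complete, click <OK>.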
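The GUI steps in sections 1 and 2 can also be scripted with VirtualBox's `VBoxManage` CLI, which helps when the same VM has to be created four times. The sketch below only prints the commands for review; it does not run them. The memory and disk sizes are meant to come from the spec table in the overview. `VM_DIR`, the `RedHat_64` OS type, the `SATA` controller name, and the helper name `vm_commands` are assumptions made for illustration, and option spellings can differ slightly between VirtualBox releases.

```shell
# Sketch: print the VBoxManage commands that mirror the GUI steps for one VM.
# Assumptions (not from the walkthrough): VM_DIR, the RedHat_64 OS type,
# and a storage controller named "SATA".
VM_DIR="${VM_DIR:-$HOME/VirtualBox VMs}"
HOSTONLY_IF="${HOSTONLY_IF:-VirtualBox Host-Only Ethernet Adapter}"

# vm_commands NAME MEMORY_MB DISK_MB
vm_commands() {
  local name="$1" mem="$2" disk="$3"
  local vdi="$VM_DIR/$name/$name.vdi"
  # The heredoc is plain text: nothing below is executed by this function.
  cat <<EOF
VBoxManage createvm --name "$name" --ostype RedHat_64 --basefolder "$VM_DIR" --register
VBoxManage modifyvm "$name" --memory $mem --boot1 dvd --boot2 disk --boot3 none --boot4 none
VBoxManage modifyvm "$name" --nic1 nat
VBoxManage modifyvm "$name" --nic2 hostonly --hostonlyadapter2 "$HOSTONLY_IF" --nicpromisc2 allow-all
VBoxManage createmedium disk --filename "$vdi" --size $disk --variant Standard
VBoxManage storagectl "$name" --name SATA --add sata
VBoxManage storageattach "$name" --storagectl SATA --port 0 --device 0 --type hdd --medium "$vdi"
EOF
}
```

For example, `vm_commands rmnode1 2048 30720` prints the commands for a 2 GB, 30 GB-disk VM. The boot order leaves out the floppy entry, matching the system setting above. Once the output looks right for your VirtualBox version, it can be piped to a shell.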

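3. Start the VM

• Click <Start> to boot the newly created VM for the first time.

• A separate VM window opens, along with a dialog asking for the startup disk (the disk of the operating system to install).

• To install the operating system (hereafter OS), click the triangle on the right and choose 'Other'.

• In the Windows file browser, go to the folder that contains the CentOS ISO, select the ISO file, and click Open.

• With the OS disk selected, click <Mount and Retry Boot> to start the installation.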

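IV. Installing Linux

1. Start

• To proceed without testing the ISO file, select "Install CentOS 7" with the keyboard and press Enter.

• To test the ISO file first, select "Test this media & install CentOS 7" and press Enter.

2. Language

• For convenience during installation, select 'Korean' as the installation language and click <Continue>.

• For production environments, installing in English is recommended because it makes troubleshooting and debugging easier.

3. Installation summary

• This is the installation summary screen. Adjust the options for each item here before starting the installation.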

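4. Software selection

• Click 'Software Selection' to edit it.

• For the base environment on the left, select GNOME Desktop.

• For the add-ons on the right, select the following four:

- GNOME Applications

- Legacy X Window System Compatibility

- Development Tools

- System Administration Tools

• When the selection is complete, click <Done>.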

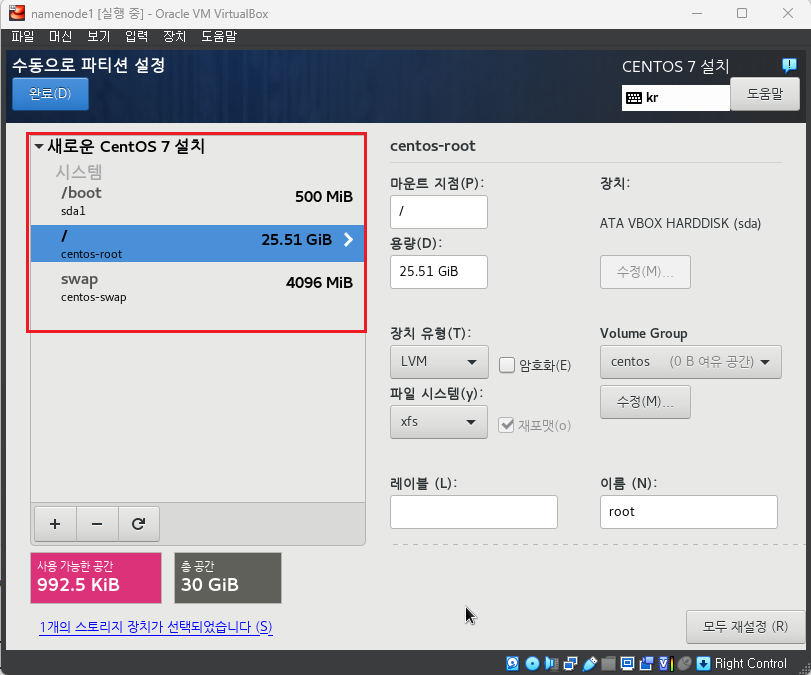

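5. Installation destination

• Click 'Installation Destination' to edit it.

• To partition automatically, select the disk, choose "Automatically configure partitioning" at the bottom, and click <Done>.

To partition manually instead, proceed as follows.

Manual disk partitioning

• Select the disk, choose "I will configure partitioning" at the bottom, and click <Done>.

• The manual partitioning screen appears.

• Click the '+' button at the lower left to create a mount point.

• Allocate each mount point with the sizes below.

• For swap, allocate the same size as physical memory, up to a maximum of 16GB.

| Mount point | Size |
|---|---|
| /boot | 500MB |
| swap | Same as physical memory |
| / | Remaining space |

• Check the applied layout on the left, then click <Done> at the upper left.

• When the summary of changes appears, click <Accept Changes>.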

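6. KDUMP

• Click 'KDUMP' to edit it.

• This is a practice setup, so uncheck 'Enable kdump'.

• In production it may be left enabled for troubleshooting, depending on your operations policy.

• When the options are set, click <Done>.

7. Network and hostname

• Click 'Network & Host Name' to edit it.

• Select Ethernet (enp0s3) on the left and toggle the Ethernet switch on the right to On.

• When the settings are complete, click <Done>.

8. Begin installation

• All preparation is complete, so click <Begin Installation>.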

9. 사용자 설정

• 설치가 진행되는 중간에 root 계정의 암호와 추가 사용자를 생성할 수 있습니다.

• 먼저 'ROOT 암호'를 선택합니다.

• 적당한 암호를 입력하고 <완료> 버튼을 클릭합니다.

• 취약한 암호를 입력한 경우에는 <완료> 버튼을 2번 클릭해야 합니다.

• 사용자를 생성을 클릭합니다

• '사용자 생성'에서는 root와는 별개의 유저를 생성니다.

• 이 단계를 건너 뛰어도 설치 진행이나 원격 접속에는 영향이 없습니다.

• 추가할 계정의 정보를 입력하고 <완료> 버튼을 클릭합니다.

• 취약한 암호를 입력한 경우에는 <완료> 버튼을 2번 클릭해야 합니다.

• 설치가 완료되면 <재부팅> 버튼을 클릭합니다.

10. 재부팅

• 자동으로 서버가 재부팅 됩니다.

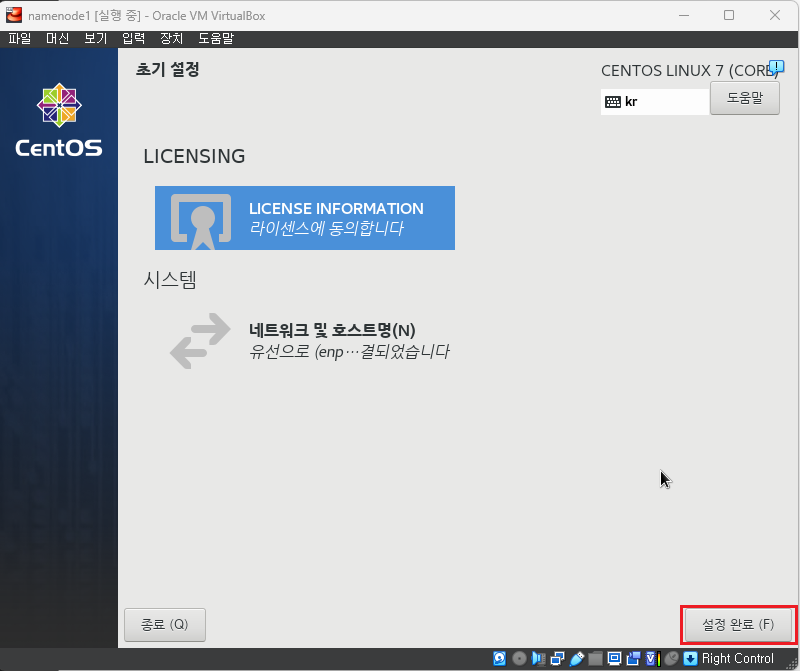

11. 초기 설정

• 재부팅 화면에서 'LICENSE INFORMATION'을 클릭하여 라이센스 동의를 진행합니다.

• '약관에 동의합니다'를 체크하고 <완료>를 클릭합니다.

• 모든 구성이 완료되었으므로 본격적인 사용을 위해 <설정 완료>를 클릭합니다.

V. 사전 준비

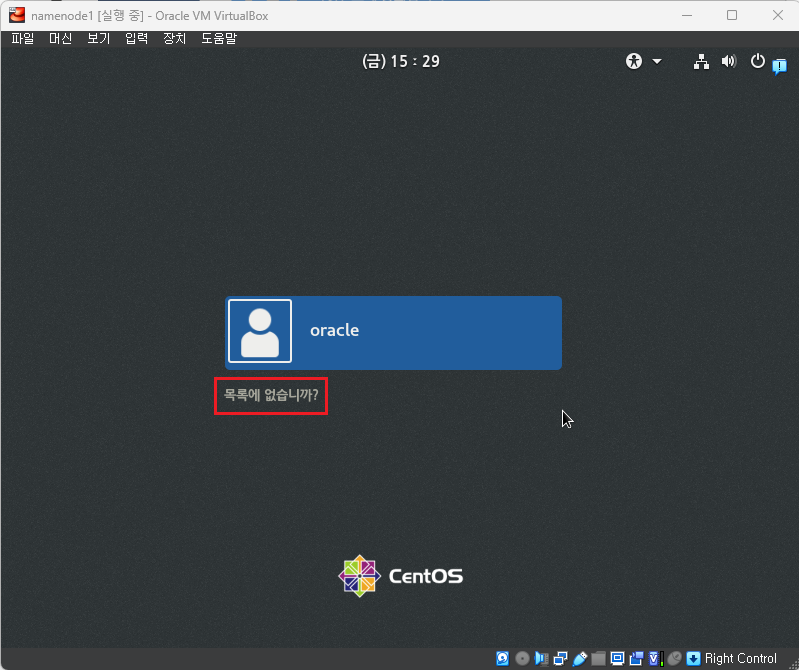

1. VirtualBox Guest Addition 설치

※ 4개의 VM 모두 진행합니다

• VirtualBox를 사용할 경우에는 실습의 편의를 위해 VBOXADDITIONS (이하 Guest Addition)를 설치하는 것이 좋습니다.

• 계정 목록 하단에 '목록에 없습니까?' 부분을 클릭합니다.

• 사용자 이름에 "root"를 입력하고 <다음>을 클릭합니다.

• 해당 유저의 암호를 입력하고 <로그인>을 클릭합니다.

• 사용자 환경 설정을 위한 화면이 나옵니다.

• 최초 설치시에 선택한 언어인 '한국어'가 기본으로 선택되어 있으면 <다음>을 클릭합니다.

• 운영 환경에서는 문제 해결이나 이슈 분석을 좀더 편하게 하기 위해 'English'를 사용하는 것을 권고합니다.

• 입력할 키보드 배치 또한 기본인 '한국어'로 되어 있습니다

• 마찬가지로 초기에 선택한 언어에 따라 다른 언어의 키보드로 나올 수 있습니다.

• <다음>을 클릭합니다.

• 개인 정보와 관련한 위치 정보 서비스 기능은 '끔'을 선택하고 <다음>을 클릭합니다.

• 온라인 계정 연결 사용하지 않을 것이므로 <건너뛰기>를 클릭합니다.

• 모든 환경 설정이 완료되었으면 <CentOS Linux 시작> 버튼을 클릭합니다.

• GNOME 환경과 관련한 안내 화면이 나옵니다.

• 오른쪽 상단에 'x'를 클릭하여 화면을 닫습니다.

• 호스트 머신의 VirtualBox 메뉴에서 '장치' > '게스트 확장 CD 이미지 삽입'을 클릭하여, Guest Addition CD를 mount 시켜줍니다.

• 설치 미디어가 인식되면 자동 시작 프로그램에 대한 안내 창이 뜹니다.

• 설치를 진행하기 위해 <실행>을 클릭합니다.

• 자동 설치 스크립트가 실행되며, VM에 Guest Addition이 설치됩니다.

• 설치가 완료되면 "엔터"를 입력하여 실행 창을 닫아줍니다.

• 바탕 화면에 우클릭 하여 터미널 창을 열어줍니다.

• 터미널 창에서 reboot 명령어를 사용해서 VM을 재부팅 합니다

[root@localhost ~]# reboot

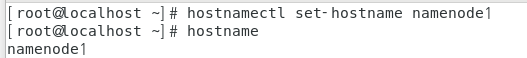

2. Change the hostname

※ Perform this on all four nodes.

※ Run as the root account.

• Set the hostname that matches each node. On each node, run only the command for that node.

[root@localhost ~]# hostnamectl set-hostname namenode1

[root@localhost ~]# hostnamectl set-hostname rmnode1

[root@localhost ~]# hostnamectl set-hostname datanode1

[root@localhost ~]# hostnamectl set-hostname datanode2

[root@localhost ~]# hostnamectl set-hostname namenode1

[root@localhost ~]# hostname

namenode1

[root@localhost ~]# hostnamectl set-hostname rmnode1

[root@localhost ~]# hostname

rmnode1

[root@localhost ~]# hostnamectl set-hostname datanode1

[root@localhost ~]# hostname

datanode1

[root@localhost ~]# hostnamectl set-hostname datanode2

[root@localhost ~]# hostname

datanode2

3. Edit the hosts file

※ Perform this on all four nodes.

※ Run as the root account.

• Edit the hosts file on each node.

• Comment out the existing entries with "#" and add the lines below.

vi /etc/hosts

192.168.56.200 namenode1

192.168.56.201 rmnode1

192.168.56.202 datanode1

192.168.56.203 datanode2

[root@localhost ~]# vi /etc/hosts

#127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

#::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.56.200 namenode1

192.168.56.201 rmnode1

192.168.56.202 datanode1

192.168.56.203 datanode2
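Because the same four entries go onto every node, the edit can also be scripted. The sketch below appends each entry only if its hostname is not already present, so running it twice leaves the file unchanged. The helper name `add_cluster_hosts` is hypothetical, and unlike the manual edit above it does not comment out the existing localhost lines.

```shell
# Sketch: append the cluster entries to a hosts file, skipping hostnames
# that already appear in it. Pass the file to edit as the first argument.
add_cluster_hosts() {
  local file="$1" ip name
  touch "$file"
  while read -r ip name; do
    # -w matches the hostname as a whole word; -q only sets the exit status.
    if ! grep -qw -- "$name" "$file"; then
      printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
  done <<'EOF'
192.168.56.200 namenode1
192.168.56.201 rmnode1
192.168.56.202 datanode1
192.168.56.203 datanode2
EOF
}
```

Try it on a scratch copy first, for example `cp /etc/hosts /tmp/hosts.test && add_cluster_hosts /tmp/hosts.test`, and run `add_cluster_hosts /etc/hosts` as root once the result looks right.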

4. Assign a static IP

※ Perform this on all four nodes.

※ Run as the root account.

• Edit /etc/sysconfig/network-scripts/ifcfg-enp0s8 to assign a static IP. The example uses namenode1's address; on each node, set IPADDR to that node's IP (192.168.56.200 through 192.168.56.203).

[root@localhost ~]# vi /etc/sysconfig/network-scripts/ifcfg-enp0s8

BOOTPROTO=static # changed from dhcp to static

ONBOOT=yes # changed from no to yes

IPADDR=192.168.56.200 # added

GATEWAY=192.168.56.1 # added

PREFIX=24 # added

[root@localhost ~]# vi /etc/sysconfig/network-scripts/ifcfg-enp0s8

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static # changed from dhcp to static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=enp0s8

UUID=7a1b5f63-3332-40da-88a6-1637cbb7363e

DEVICE=enp0s8

ONBOOT=yes # changed from no to yes

IPADDR=192.168.56.200 # added

GATEWAY=192.168.56.1 # added

PREFIX=24 # added
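The same five changes can be applied without opening an editor. The sketch below updates a key in place when it exists and appends it otherwise, leaving the remaining lines (UUID, IPv6 options, and so on) untouched. The helper names `set_kv` and `set_static_ip` are hypothetical, and the script assumes GNU sed, as shipped with CentOS 7.

```shell
# set_kv FILE KEY VALUE: replace an existing KEY=... line, or append one.
set_kv() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}=" "$file"; then
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# set_static_ip FILE IPADDR: apply the settings from this section.
set_static_ip() {
  local file="$1" ip="$2"
  set_kv "$file" BOOTPROTO static
  set_kv "$file" ONBOOT yes
  set_kv "$file" IPADDR "$ip"
  set_kv "$file" GATEWAY 192.168.56.1
  set_kv "$file" PREFIX 24
}
```

On rmnode1, for instance, the call would be `set_static_ip /etc/sysconfig/network-scripts/ifcfg-enp0s8 192.168.56.201`, run as root. Keeping a backup copy of the file beforehand is a sensible precaution.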

5. Reboot

※ Perform this on all four nodes.

※ Run as the root account.

• After assigning the static IP, restart the network service and reboot to apply it.

[root@localhost ~]# systemctl restart network

[root@localhost ~]# reboot

6. Connectivity test

※ Perform this on all four nodes.

※ Run as the root account.

• Check that the IP was applied correctly.

[root@namenode1 ~]# ifconfig

enp0s3: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.2.15 netmask 255.255.255.0 broadcast 10.0.2.255

inet6 fe80::809c:e742:7d49:6798 prefixlen 64 scopeid 0x20<link>

ether 08:00:27:7c:ca:b8 txqueuelen 1000 (Ethernet)

RX packets 112 bytes 23611 (23.0 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 139 bytes 13890 (13.5 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

enp0s8: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.56.200 netmask 255.255.255.0 broadcast 192.168.56.255

inet6 fe80::574:bc:7efb:6b5c prefixlen 64 scopeid 0x20<link>

ether 08:00:27:c2:8c:82 txqueuelen 1000 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 33 bytes 4493 (4.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 64 bytes 5184 (5.0 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 64 bytes 5184 (5.0 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

virbr0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 192.168.122.1 netmask 255.255.255.0 broadcast 192.168.122.255

ether 52:54:00:6b:47:6c txqueuelen 1000 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

• Check that the node can communicate now that the IP is applied.

[root@namenode1 ~]# ping -c 2 8.8.8.8

PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.

64 bytes from 8.8.8.8: icmp_seq=1 ttl=56 time=29.2 ms

64 bytes from 8.8.8.8: icmp_seq=2 ttl=56 time=29.6 ms

--- 8.8.8.8 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 29.212/29.406/29.600/0.194 ms
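The ping above only confirms outbound connectivity. As a complementary sketch, the loop below pings each cluster hostname once, which also exercises the /etc/hosts entries and the host-only addresses. The helper name `check_nodes` and the `PING` override variable are assumptions for illustration; `-c 1 -W 1` sends one packet with a one-second timeout on Linux.

```shell
NODES="namenode1 rmnode1 datanode1 datanode2"
PING="${PING:-ping}"   # overridable, e.g. for testing without a network

# check_nodes: ping every node once; returns non-zero if any is unreachable.
check_nodes() {
  local node failed=0
  for node in $NODES; do
    if "$PING" -c 1 -W 1 "$node" >/dev/null 2>&1; then
      echo "$node ok"
    else
      echo "$node unreachable"
      failed=1
    fi
  done
  return "$failed"
}
```

Calling `check_nodes` on each node after the reboot gives a quick pass or fail per host before moving on to the SSH setup.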

7. SELinux configuration

※ Perform this on all four nodes.

※ Run as the root account.

• Change the SELINUX setting to disabled.

[root@namenode1 ~]# vi /etc/sysconfig/selinux

SELINUX=disabled

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

#SELINUX=enforcing

SELINUX=disabled

# SELINUXTYPE= can take one of three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted
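For a non-interactive version of this edit, one detail matters: on CentOS 7, /etc/sysconfig/selinux is a symbolic link to /etc/selinux/config, and GNU sed's `-i` replaces a symlink with a regular file unless `--follow-symlinks` is given. The sketch below therefore targets /etc/selinux/config by default and passes that flag anyway. The helper name `disable_selinux_config` is hypothetical.

```shell
# disable_selinux_config [FILE]: set SELINUX=disabled in the given config.
# The pattern requires "=" right after SELINUX, so SELINUXTYPE= is untouched.
disable_selinux_config() {
  local file="${1:-/etc/selinux/config}"
  sed -i --follow-symlinks 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}
```

The new setting is read at boot, so it takes effect after the next reboot; `getenforce` shows the mode currently in force.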

8. Firewall configuration

※ Perform this on all four nodes.

※ Run as the root account.

• Stop the firewall so that the nodes can communicate without being blocked.

• If the last command reports "not running" as the firewall state, the firewall was stopped successfully.

[root@namenode1 ~]# systemctl stop firewalld.service

[root@namenode1 ~]# systemctl disable firewalld.service

[root@namenode1 ~]# systemctl restart network

[root@namenode1 ~]# firewall-cmd --state

[root@namenode1 ~]# systemctl stop firewalld.service

[root@namenode1 ~]# systemctl disable firewalld.service

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@namenode1 ~]# systemctl restart network

[root@namenode1 ~]# firewall-cmd --state

not running

9. SSH configuration

※ Perform this on all four nodes.

※ Run as the root account.

※ The SSH keygen step is identical on all four nodes, but the SSH key distribution targets differ per node, so take care.

• SSH keygen

[root@namenode1 ~]# ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa;\

> cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys;\

> chmod 0600 ~/.ssh/authorized_keys

[root@namenode1 ~]# ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa;\

> cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys;\

> chmod 0600 ~/.ssh/authorized_keys

Generating public/private rsa key pair.

Created directory '/root/.ssh'.

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:yOBU6MclBLrh5V7OYlesljLx6AU+OorL61XvVo4KJzc root@namenode1

The key's randomart image is:

+---[RSA 2048]----+

| .+o |

| .... . |

| o.+. o |

| . B.ooo |

| o =o+ S |

| o.O.+ . |

| +XEX.+ |

|o o+=Ooo . |

|=*o...o. |

+----[SHA256]-----+

[root@namenode1 ~]#

• SSH key distribution from namenode1

[root@namenode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@rmnode1

[root@namenode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@datanode1

[root@namenode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@datanode2

[root@namenode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@rmnode1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'rmnode1 (192.168.56.201)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@rmnode1's password: < enter the root password for rmnode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@rmnode1'"

and check to make sure that only the key(s) you wanted were added.

[root@namenode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@datanode1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'datanode1 (192.168.56.202)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@datanode1's password: < enter the root password for datanode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@datanode1'"

and check to make sure that only the key(s) you wanted were added.

[root@namenode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@datanode2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'datanode2 (192.168.56.203)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@datanode2's password: < enter the root password for datanode2 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@datanode2'"

and check to make sure that only the key(s) you wanted were added.

[root@namenode1 ~]#

• SSH key distribution from rmnode1

[root@rmnode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@namenode1

[root@rmnode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@datanode1

[root@rmnode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@datanode2

[root@rmnode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@namenode1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'namenode1 (192.168.56.200)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@namenode1's password: < enter the root password for namenode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@namenode1'"

and check to make sure that only the key(s) you wanted were added.

[root@rmnode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@datanode1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'datanode1 (192.168.56.202)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@datanode1's password: < enter the root password for datanode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@datanode1'"

and check to make sure that only the key(s) you wanted were added.

[root@rmnode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@datanode2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'datanode2 (192.168.56.203)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@datanode2's password: < enter the root password for datanode2 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@datanode2'"

and check to make sure that only the key(s) you wanted were added.

[root@rmnode1 ~]#

• SSH key distribution from datanode1

[root@datanode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@namenode1

[root@datanode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@rmnode1

[root@datanode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@datanode2

[root@datanode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@namenode1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'namenode1 (192.168.56.200)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@namenode1's password: < enter the root password for namenode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@namenode1'"

and check to make sure that only the key(s) you wanted were added.

[root@datanode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@rmnode1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'rmnode1 (192.168.56.201)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@rmnode1's password: < enter the root password for rmnode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@rmnode1'"

and check to make sure that only the key(s) you wanted were added.

[root@datanode1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@datanode2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'datanode2 (192.168.56.203)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@datanode2's password: < enter the root password for datanode2 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@datanode2'"

and check to make sure that only the key(s) you wanted were added.

• SSH key distribution from datanode2

[root@datanode2 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@namenode1

[root@datanode2 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@rmnode1

[root@datanode2 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@datanode1

[root@datanode2 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@namenode1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'namenode1 (192.168.56.200)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@namenode1's password: < enter the root password for namenode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@namenode1'"

and check to make sure that only the key(s) you wanted were added.

[root@datanode2 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@rmnode1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'rmnode1 (192.168.56.201)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@rmnode1's password: < enter the root password for rmnode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@rmnode1'"

and check to make sure that only the key(s) you wanted were added.

[root@datanode2 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@datanode1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'datanode1 (192.168.56.202)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@datanode1's password: < enter the root password for datanode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@datanode1'"

and check to make sure that only the key(s) you wanted were added.

[root@datanode2 ~]#
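The four per-node command lists above follow one rule: each node copies its public key to every node except itself. A minimal sketch of that rule as a single script that can be run unchanged on all four nodes is shown below. The helper names `peers_of` and `copy_key_to_peers` are hypothetical, and the script assumes the hostnames set earlier, so that `uname -n` returns the node's own name.

```shell
NODES="namenode1 rmnode1 datanode1 datanode2"

# peers_of NODE: print every cluster node except NODE, one per line.
peers_of() {
  local self="$1" node
  for node in $NODES; do
    [ "$node" = "$self" ] || echo "$node"
  done
}

# copy_key_to_peers: install this node's public key on each peer.
# ssh-copy-id still asks for the peer's root password once per host.
copy_key_to_peers() {
  local peer
  for peer in $(peers_of "$(uname -n)"); do
    ssh-copy-id -i ~/.ssh/id_rsa.pub "root@$peer"
  done
}
```

Running `copy_key_to_peers` as root on each node replaces the three separate `ssh-copy-id` commands for that node.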

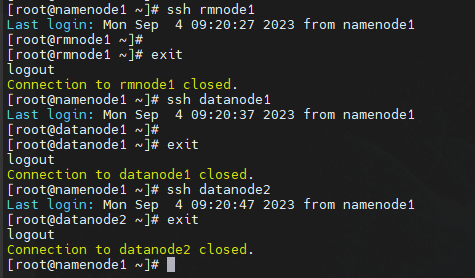

• Verify SSH access from namenode1

[root@namenode1 ~]# ssh rmnode1

[root@namenode1 ~]# ssh datanode1

[root@namenode1 ~]# ssh datanode2

[root@namenode1 ~]# ssh rmnode1

Last login: Mon Sep 4 09:20:27 2023 from namenode1

[root@rmnode1 ~]# exit

logout

Connection to rmnode1 closed.

[root@namenode1 ~]# ssh datanode1

Last login: Mon Sep 4 09:20:37 2023 from namenode1

[root@datanode1 ~]# exit

logout

Connection to datanode1 closed.

[root@namenode1 ~]# ssh datanode2

Last login: Mon Sep 4 09:20:47 2023 from namenode1

[root@datanode2 ~]# exit

logout

Connection to datanode2 closed.

[root@namenode1 ~]#

• Verify SSH access from rmnode1

[root@rmnode1 ~]# ssh namenode1

[root@rmnode1 ~]# ssh datanode1

[root@rmnode1 ~]# ssh datanode2

[root@rmnode1 ~]# ssh namenode1

Last login: Mon Sep 4 08:08:40 2023 from gateway

[root@namenode1 ~]# exit

logout

Connection to namenode1 closed.

[root@rmnode1 ~]# ssh datanode1

Last login: Mon Sep 4 09:21:45 2023 from namenode1

[root@datanode1 ~]# exit

logout

Connection to datanode1 closed.

[root@rmnode1 ~]# ssh datanode2

Last login: Mon Sep 4 09:21:50 2023 from namenode1

[root@datanode2 ~]# exit

logout

Connection to datanode2 closed.

[root@rmnode1 ~]#

• Verify SSH access from datanode1

[root@datanode1 ~]# ssh namenode1

[root@datanode1 ~]# ssh rmnode1

[root@datanode1 ~]# ssh datanode2

[root@datanode1 ~]# ssh namenode1

Last login: Mon Sep 4 09:25:15 2023 from rmnode1

[root@namenode1 ~]# exit

logout

Connection to namenode1 closed.

[root@datanode1 ~]# ssh rmnode1

Last login: Mon Sep 4 09:21:32 2023 from namenode1

[root@rmnode1 ~]# exit

logout

Connection to rmnode1 closed.

[root@datanode1 ~]# ssh datanode2

Last login: Mon Sep 4 09:25:30 2023 from rmnode1

[root@datanode2 ~]# exit

logout

Connection to datanode2 closed.

[root@datanode1 ~]#

• Verify SSH access from datanode2

[root@datanode2 ~]# ssh namenode1

[root@datanode2 ~]# ssh rmnode1

[root@datanode2 ~]# ssh datanode1

[root@datanode2 ~]# ssh namenode1

Last login: Mon Sep 4 09:26:30 2023 from datanode1

[root@namenode1 ~]# exit

logout

Connection to namenode1 closed.

[root@datanode2 ~]# ssh rmnode1

Last login: Mon Sep 4 09:26:36 2023 from datanode1

[root@rmnode1 ~]# exit

logout

Connection to rmnode1 closed.

[root@datanode2 ~]# ssh datanode1

Last login: Mon Sep 4 09:25:23 2023 from rmnode1

[root@datanode1 ~]# exit

logout

Connection to datanode1 closed.

[root@datanode2 ~]#
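The manual logins above can be condensed into a non-interactive check. In the sketch below, `BatchMode=yes` makes ssh fail instead of prompting for a password, so a node whose key was not installed shows up as a failure instead of hanging at a prompt. The helper name `verify_ssh` and the `SSH` override variable are assumptions for illustration. The node's own hostname is skipped, since the steps above only set up access to the other three nodes.

```shell
NODES="namenode1 rmnode1 datanode1 datanode2"
SSH="${SSH:-ssh}"   # overridable, e.g. for testing without a cluster

# verify_ssh: log in to every other node and compare the reported hostname.
verify_ssh() {
  local node remote self failed=0
  self="$(uname -n)"
  for node in $NODES; do
    [ "$node" = "$self" ] && continue
    if remote="$("$SSH" -o BatchMode=yes -o ConnectTimeout=5 "root@$node" hostname 2>/dev/null)" \
        && [ "$remote" = "$node" ]; then
      echo "$node ok"
    else
      echo "$node FAILED"
      failed=1
    fi
  done
  return "$failed"
}
```

Running `verify_ssh` as root on each node should print three `ok` lines; any `FAILED` line points to a node where `ssh-copy-id` needs to be repeated.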

VI. Installing Java

1. Install Java

※ Perform this on all four nodes.

※ Run as the root account.

[root@namenode1 ~]# yum install -y java-1.8.0-openjdk-devel.x86_64

[root@namenode1 ~]# javac -version

[root@namenode1 ~]# yum install -y java-1.8.0-openjdk-devel.x86_64

Loaded plugins: fastestmirror, langpacks

Loading mirror speeds from cached hostfile

* base: mirror.kakao.com

* extras: mirror.kakao.com

* updates: mirror.kakao.com

base | 3.6 kB 00:00:00

extras | 2.9 kB 00:00:00

updates | 2.9 kB 00:00:00

Resolving Dependencies

--> Running transaction check

---> Package java-1.8.0-openjdk-devel.x86_64 1:1.8.0.382.b05-1.el7_9 will be installed

--> Processing Dependency: java-1.8.0-openjdk(x86-64) = 1:1.8.0.382.b05-1.el7_9 for package: 1:java-1.8.0-openjdk-devel-1.8.0.382.b05-1.el7_9.x86_64

--> Running transaction check

---> Package java-1.8.0-openjdk.x86_64 1:1.8.0.262.b10-1.el7 will be updated

---> Package java-1.8.0-openjdk.x86_64 1:1.8.0.382.b05-1.el7_9 will be an update

--> Processing Dependency: java-1.8.0-openjdk-headless(x86-64) = 1:1.8.0.382.b05-1.el7_9 for package: 1:java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64

--> Running transaction check

---> Package java-1.8.0-openjdk-headless.x86_64 1:1.8.0.262.b10-1.el7 will be updated

---> Package java-1.8.0-openjdk-headless.x86_64 1:1.8.0.382.b05-1.el7_9 will be an update

--> Processing Dependency: tzdata-java >= 2022g for package: 1:java-1.8.0-openjdk-headless-1.8.0.382.b05-1.el7_9.x86_64

--> Running transaction check

---> Package tzdata-java.noarch 0:2020a-1.el7 will be updated

---> Package tzdata-java.noarch 0:2023c-1.el7 will be an update

--> Finished Dependency Resolution

Dependencies Resolved

=========================================================================================================

Package Arch Version Repository Size

=========================================================================================================

Installing:

java-1.8.0-openjdk-devel x86_64 1:1.8.0.382.b05-1.el7_9 updates 9.8 M

Updating for dependencies:

java-1.8.0-openjdk x86_64 1:1.8.0.382.b05-1.el7_9 updates 318 k

java-1.8.0-openjdk-headless x86_64 1:1.8.0.382.b05-1.el7_9 updates 33 M

tzdata-java noarch 2023c-1.el7 updates 186 k

Transaction Summary

=========================================================================================================

Install 1 Package

Upgrade ( 3 Dependent packages)

Total size: 43 M

Total download size: 9.8 M

Downloading packages:

경고: /var/cache/yum/x86_64/7/updates/packages/java-1.8.0-openjdk-devel-1.8.0.382.b05-1.el7_9.x86_64.rpm: Header V4 RSA/SHA256 Signature, key ID f4a80eb5: NOKEY

Public key for java-1.8.0-openjdk-devel-1.8.0.382.b05-1.el7_9.x86_64.rpm is not installed

java-1.8.0-openjdk-devel-1.8.0.382.b05-1.el7_9.x86_64.rpm | 9.8 MB 00:00:00

Retrieving key from file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

Importing GPG key 0xF4A80EB5:

Userid : "CentOS-7 Key (CentOS 7 Official Signing Key) <security@centos.org>"

Fingerprint: 6341 ab27 53d7 8a78 a7c2 7bb1 24c6 a8a7 f4a8 0eb5

Package : centos-release-7-9.2009.0.el7.centos.x86_64 (@anaconda)

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Updating : tzdata-java-2023c-1.el7.noarch 1/7

Updating : 1:java-1.8.0-openjdk-headless-1.8.0.382.b05-1.el7_9.x86_64 2/7

warning: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64/jre/lib/security/blacklisted.certs created as /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64/jre/lib/security/blacklisted.certs.rpmnew

warning: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64/jre/lib/security/java.policy created as /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64/jre/lib/security/java.policy.rpmnew

warning: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64/jre/lib/security/java.security created as /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64/jre/lib/security/java.security.rpmnew

restored /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64/jre/lib/security/blacklisted.certs.rpmnew to /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64/jre/lib/security/blacklisted.certs

restored /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64/jre/lib/security/java.policy.rpmnew to /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64/jre/lib/security/java.policy

restored /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64/jre/lib/security/java.security.rpmnew to /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64/jre/lib/security/java.security

Updating : 1:java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64 3/7

Installing : 1:java-1.8.0-openjdk-devel-1.8.0.382.b05-1.el7_9.x86_64 4/7

Cleanup : 1:java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64 5/7

Cleanup : 1:java-1.8.0-openjdk-headless-1.8.0.262.b10-1.el7.x86_64 6/7

Cleanup : tzdata-java-2020a-1.el7.noarch 7/7

Verifying : 1:java-1.8.0-openjdk-headless-1.8.0.382.b05-1.el7_9.x86_64 1/7

Verifying : 1:java-1.8.0-openjdk-devel-1.8.0.382.b05-1.el7_9.x86_64 2/7

Verifying : tzdata-java-2023c-1.el7.noarch 3/7

Verifying : 1:java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64 4/7

Verifying : 1:java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64 5/7

Verifying : 1:java-1.8.0-openjdk-headless-1.8.0.262.b10-1.el7.x86_64 6/7

Verifying : tzdata-java-2020a-1.el7.noarch 7/7

Installed:

java-1.8.0-openjdk-devel.x86_64 1:1.8.0.382.b05-1.el7_9

Dependency Updated:

java-1.8.0-openjdk.x86_64 1:1.8.0.382.b05-1.el7_9

java-1.8.0-openjdk-headless.x86_64 1:1.8.0.382.b05-1.el7_9

tzdata-java.noarch 0:2023c-1.el7

Complete!

[root@namenode1 ~]# javac -version

javac 1.8.0_382

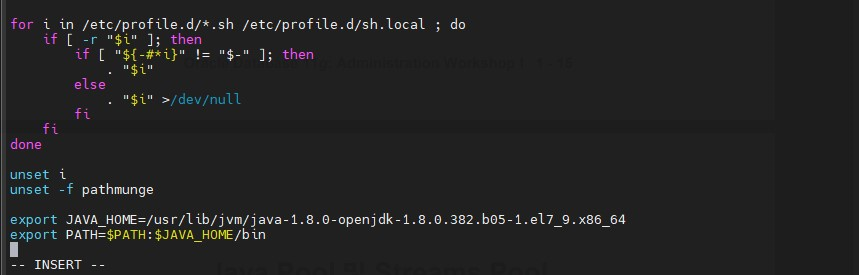

2. Setting Java environment variables

※ Perform this on all four nodes.

※ Run as the root account.

※ Add the JAVA_HOME and PATH settings at the very end of /etc/profile.

※ The OpenJDK path has the form '/usr/lib/jvm/java-<VERSION>-openjdk-<JDK-VERSION>.x86_64'.

[root@namenode1 ~]# vi /etc/profile

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.382.b05-1.el7_9.x86_64

export PATH=$PATH:$JAVA_HOME/bin
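The versioned directory name changes whenever yum updates OpenJDK, so instead of typing it by hand the value can be derived from the resolved location of javac. This is a minimal sketch: the helper name java_home_from_javac is made up for this example, and it assumes javac lives in <JAVA_HOME>/bin, as it does for the OpenJDK package installed above.

```shell
#!/bin/sh
# Hypothetical helper: strip the trailing /bin/javac from a resolved javac path.
java_home_from_javac() {
    dirname "$(dirname "$1")"
}

# On a node with the JDK installed, resolve the symlink chain behind `javac`.
if command -v javac >/dev/null 2>&1; then
    JAVA_HOME=$(java_home_from_javac "$(readlink -f "$(command -v javac)")")
    export JAVA_HOME
    echo "JAVA_HOME=$JAVA_HOME"
fi
```

After editing /etc/profile, running `source /etc/profile` (or logging in again) applies the variables to the current shell.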

VII. Installing ZooKeeper

1. Creating the zookeeper account

※ Perform this on three nodes (namenode1, rmnode1, datanode1).

※ Run as the root account.

[root@namenode1 ~]# adduser zookeeper

[root@namenode1 ~]# passwd zookeeper

Changing password for user zookeeper.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.

2. Setting up SSH for the zookeeper account

※ Perform this on three nodes (namenode1, rmnode1, datanode1).

※ Run as the zookeeper account.

※ Key generation is identical on all three nodes, but the ssh-copy-id targets differ per node, so take care.

• Switch to the zookeeper user

[root@namenode1 ~]# su - zookeeper

• Generate an SSH key pair

[zookeeper@namenode1 ~]$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa;\

> cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys;\

> chmod 0600 ~/.ssh/authorized_keys

Generating public/private rsa key pair.

Created directory '/home/zookeeper/.ssh'.

Your identification has been saved in /home/zookeeper/.ssh/id_rsa.

Your public key has been saved in /home/zookeeper/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:eUxA1VY3+KA9sU3ZlMlmUNdnbLt0KB0gDpGR4KKPjiw zookeeper@namenode1

The key's randomart image is:

+---[RSA 2048]----+

| .o**o..+*=X|

| . o+ .o+.XX|

| . . o.o.X=o|

| . . + ..+o=.|

| . S o .o o|

| o . . |

| . . |

|Eo |

|o.. |

+----[SHA256]-----+

• SSH setup on namenode1

[zookeeper@namenode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub zookeeper@rmnode1

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'rmnode1 (192.168.56.201)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

zookeeper@rmnode1's password: < enter the password of the zookeeper account on rmnode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'zookeeper@rmnode1'"

and check to make sure that only the key(s) you wanted were added.

[zookeeper@namenode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub zookeeper@datanode1

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'datanode1 (192.168.56.202)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

zookeeper@datanode1's password: < enter the password of the zookeeper account on datanode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'zookeeper@datanode1'"

and check to make sure that only the key(s) you wanted were added.

• Verifying SSH access from namenode1

[zookeeper@namenode1 ~]$ ssh rmnode1

Last login: Mon Sep 4 17:30:41 2023 from datanode1

[zookeeper@rmnode1 ~]$ exit

logout

Connection to rmnode1 closed.

[zookeeper@namenode1 ~]$ ssh datanode1

Last login: Mon Sep 4 17:27:30 2023 from rmnode1

[zookeeper@datanode1 ~]$ exit

logout

Connection to datanode1 closed.

[zookeeper@namenode1 ~]$

• SSH setup on rmnode1

[zookeeper@rmnode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub zookeeper@namenode1

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'namenode1 (192.168.56.200)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

zookeeper@namenode1's password: < enter the password of the zookeeper account on namenode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'zookeeper@namenode1'"

and check to make sure that only the key(s) you wanted were added.

[zookeeper@rmnode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub zookeeper@datanode1

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'datanode1 (192.168.56.202)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

zookeeper@datanode1's password: < enter the password of the zookeeper account on datanode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'zookeeper@datanode1'"

and check to make sure that only the key(s) you wanted were added.

• Verifying SSH access from rmnode1

[zookeeper@rmnode1 ~]$ ssh namenode1

Last login: Mon Sep 4 17:11:34 2023

[zookeeper@namenode1 ~]$ exit

logout

Connection to namenode1 closed.

[zookeeper@rmnode1 ~]$ ssh datanode1

Last login: Mon Sep 4 17:24:35 2023

[zookeeper@datanode1 ~]$ exit

logout

Connection to datanode1 closed.

[zookeeper@rmnode1 ~]$

• SSH setup on datanode1

[zookeeper@datanode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub zookeeper@namenode1

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'namenode1 (192.168.56.200)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

zookeeper@namenode1's password: < enter the password of the zookeeper account on namenode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'zookeeper@namenode1'"

and check to make sure that only the key(s) you wanted were added.

[zookeeper@datanode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub zookeeper@rmnode1

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'rmnode1 (192.168.56.201)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

zookeeper@rmnode1's password: < enter the password of the zookeeper account on rmnode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'zookeeper@rmnode1'"

and check to make sure that only the key(s) you wanted were added.

• Verifying SSH access from datanode1

[zookeeper@datanode1 ~]$ ssh namenode1

Last login: Mon Sep 4 17:27:24 2023 from rmnode1

[zookeeper@namenode1 ~]$ exit

logout

Connection to namenode1 closed.

[zookeeper@datanode1 ~]$ ssh rmnode1

Last login: Mon Sep 4 17:24:30 2023

[zookeeper@rmnode1 ~]$ exit

logout

Connection to rmnode1 closed.

[zookeeper@datanode1 ~]$

3. Installing ZooKeeper

※ Perform this on namenode1 (install on namenode1, then distribute to the other nodes).

※ Run as the zookeeper account.

• Download ZooKeeper

[zookeeper@namenode1 ~]$ wget https://archive.apache.org/dist/zookeeper/zookeeper-3.4.10/zookeeper-3.4.10.tar.gz

--2023-09-04 18:07:13-- https://archive.apache.org/dist/zookeeper/zookeeper-3.4.10/zookeeper-3.4.10.tar.gz

Resolving archive.apache.org (archive.apache.org)... 65.108.204.189, 2a01:4f9:1a:a084::2

Connecting to archive.apache.org (archive.apache.org)|65.108.204.189|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 35042811 (33M) [application/x-gzip]

Saving to: ‘zookeeper-3.4.10.tar.gz’

100%[=====================================================================================>] 35,042,811 1.26MB/s in 80s

2023-09-04 18:08:41 (427 KB/s) - ‘zookeeper-3.4.10.tar.gz’ saved [35042811/35042811]

[zookeeper@namenode1 ~]$

• Verify the download

[zookeeper@namenode1 ~]$ ll

total 34224

-rw-rw-r-- 1 zookeeper zookeeper 35042811 Mar 30 2017 zookeeper-3.4.10.tar.gz

• Extract the archive

[zookeeper@namenode1 ~]$ tar xvfz zookeeper-3.4.10.tar.gz

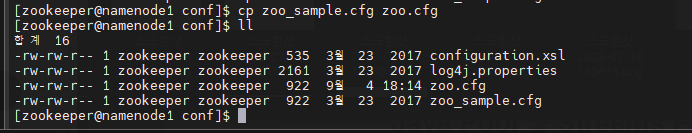

4. Configuring ZooKeeper

※ Run as the zookeeper account.

• Change to the ZooKeeper conf directory

[zookeeper@namenode1 ~]$ cd zookeeper-3.4.10/conf

• Copy the sample config file

[zookeeper@namenode1 conf]$ cp zoo_sample.cfg zoo.cfg

• Edit the config file

※ Comment out (#) all existing lines and add the following at the end of the file.

[zookeeper@namenode1 conf]$ vi zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/home/zookeeper/data

clientPort=2181

maxClientCnxns=0

maxSessionTimeout=180000

server.1=namenode1:2888:3888

server.2=rmnode1:2888:3888

server.3=datanode1:2888:3888
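The number after `server.` is the ID that each node later stores in its myid file, so the order of the hosts matters. The following is a small sketch that prints the three ensemble lines from an ordered host list. The function name print_server_lines is hypothetical; the ports 2888 and 3888 are the ones used in the configuration above.

```shell
#!/bin/sh
# Hypothetical helper: print one server.N line per host, numbering from 1.
print_server_lines() {
    id=1
    for host in "$@"; do
        echo "server.${id}=${host}:2888:3888"
        id=$((id + 1))
    done
}

print_server_lines namenode1 rmnode1 datanode1
```

Keeping the host list in one place like this makes it harder for the server entries and the myid values to drift apart.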

5. Re-archiving the configured ZooKeeper directory

※ Run as the zookeeper account.

• Change to /home/zookeeper

[zookeeper@namenode1 conf]$ cd ~

• Create the archive

[zookeeper@namenode1 ~]$ tar cvfz zookeeper.tar.gz zookeeper-3.4.10
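When re-archiving, it is the `c` (create) flag that produces a new archive; `x` only extracts an existing one. The sketch below rehearses the step in a throwaway directory so that the archive contents can be checked with `tar tzf` before the file is copied anywhere. The scratch directory and the placeholder zoo.cfg are assumptions made for the demonstration only.

```shell
#!/bin/sh
# Work in a scratch directory so nothing in the real home directory is touched.
work=$(mktemp -d)
mkdir -p "$work/zookeeper-3.4.10/conf"
echo "clientPort=2181" > "$work/zookeeper-3.4.10/conf/zoo.cfg"

# c = create, z = gzip, f = archive file name; -C changes directory first.
tar czf "$work/zookeeper.tar.gz" -C "$work" zookeeper-3.4.10

# t = list the archive contents without extracting them.
listing=$(tar tzf "$work/zookeeper.tar.gz")
echo "$listing"
```

If the listing shows the edited conf/zoo.cfg under zookeeper-3.4.10/, extracting the archive on another node reproduces the same directory layout.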

6. Distributing the archive to rmnode1 and datanode1

※ Run as the zookeeper account.

• Copy the archive to each node

※ Run on namenode1

[zookeeper@namenode1 ~]$ scp zookeeper.tar.gz zookeeper@rmnode1:/home/zookeeper

zookeeper.tar.gz 100% 33MB 41.8MB/s 00:00

[zookeeper@namenode1 ~]$ scp zookeeper.tar.gz zookeeper@datanode1:/home/zookeeper

zookeeper.tar.gz 100% 33MB 34.4MB/s 00:00

[zookeeper@namenode1 ~]$

• Extract the archive

※ Run on rmnode1 and datanode1 only

• rmnode1

[zookeeper@rmnode1 ~]$ tar xvfz zookeeper.tar.gz

• datanode1

[zookeeper@datanode1 ~]$ tar xvfz zookeeper.tar.gz

7. Setting myid

※ Perform this on three nodes (namenode1, rmnode1, datanode1).

※ Run as the zookeeper account.

• Create the data directory

[zookeeper@namenode1 ~]$ mkdir data

• Change to the data directory

[zookeeper@namenode1 ~]$ cd data/

• Create the myid file and enter its content

※ Enter 1 in the myid file on namenode1.

※ Enter 2 in the myid file on rmnode1.

※ Enter 3 in the myid file on datanode1.

[zookeeper@namenode1 data]$ touch myid

[zookeeper@namenode1 data]$ vi myid
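Because the myid value has to match the `server.N` entry for the same host in zoo.cfg, it can be looked up rather than typed. This is a sketch under that assumption: myid_for is a hypothetical helper, and the sample config is written to a temporary file purely for illustration.

```shell
#!/bin/sh
# Hypothetical helper: print N from the "server.N=<host>:..." line of a zoo.cfg.
myid_for() {
    host=$1
    cfg=$2
    sed -n "s/^server\.\([0-9][0-9]*\)=${host}:.*/\1/p" "$cfg"
}

# Sample config mirroring the server entries used in this guide.
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
server.1=namenode1:2888:3888
server.2=rmnode1:2888:3888
server.3=datanode1:2888:3888
EOF

myid_for rmnode1 "$cfg"
```

On a real node the call might look like `myid_for "$(hostname)" ~/zookeeper-3.4.10/conf/zoo.cfg > ~/data/myid`, provided the machine's hostname is exactly the name written in zoo.cfg.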

8. Starting the ZooKeeper servers

※ Perform this on three nodes (namenode1, rmnode1, datanode1).

※ Run as the zookeeper account.

• Start the server

• namenode1

[zookeeper@namenode1 data]$ cd ~

[zookeeper@namenode1 ~]$ cd zookeeper-3.4.10/

[zookeeper@namenode1 zookeeper-3.4.10]$ ./bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/zookeeper/zookeeper-3.4.10/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

• rmnode1

[zookeeper@rmnode1 data]$ cd ~

[zookeeper@rmnode1 ~]$ cd zookeeper-3.4.10/

[zookeeper@rmnode1 zookeeper-3.4.10]$ ./bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/zookeeper/zookeeper-3.4.10/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

• datanode1

[zookeeper@datanode1 data]$ cd ~

[zookeeper@datanode1 ~]$ cd zookeeper-3.4.10/

[zookeeper@datanode1 zookeeper-3.4.10]$ ./bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/zookeeper/zookeeper-3.4.10/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

• Check the server status

※ Check only after ZooKeeper has been started on all three nodes (namenode1, rmnode1, datanode1).

※ Check on all three nodes.

• namenode1

[zookeeper@namenode1 zookeeper-3.4.10]$ ./bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/zookeeper/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: leader

• rmnode1

[zookeeper@rmnode1 zookeeper-3.4.10]$ ./bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/zookeeper/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: follower

• datanode1

[zookeeper@datanode1 zookeeper-3.4.10]$ ./bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/zookeeper/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: follower

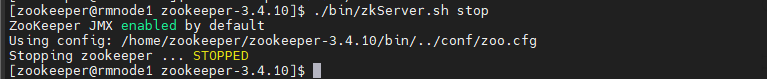
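A healthy three-node ensemble should report exactly one leader and two followers, as in the output above. The sketch below pulls the `Mode:` value out of `zkServer.sh status` output so that it can be compared in a script. zk_mode is a hypothetical helper, and the sample text is the status output shown in this section.

```shell
#!/bin/sh
# Hypothetical helper: read zkServer.sh status output on stdin, print the mode.
zk_mode() {
    sed -n 's/^Mode: //p'
}

sample='ZooKeeper JMX enabled by default
Using config: /home/zookeeper/zookeeper-3.4.10/bin/../conf/zoo.cfg
Mode: leader'

mode=$(printf '%s\n' "$sample" | zk_mode)
echo "$mode"
```

On a node, the same helper could be fed from the real command, for example `./bin/zkServer.sh status 2>&1 | zk_mode`.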

• Stop the server

※ Stop it on all three nodes.

• namenode1

[zookeeper@namenode1 zookeeper-3.4.10]$ ./bin/zkServer.sh stop

ZooKeeper JMX enabled by default

Using config: /home/zookeeper/zookeeper-3.4.10/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

• rmnode1

[zookeeper@rmnode1 zookeeper-3.4.10]$ ./bin/zkServer.sh stop

ZooKeeper JMX enabled by default

Using config: /home/zookeeper/zookeeper-3.4.10/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

• datanode1

[zookeeper@datanode1 zookeeper-3.4.10]$ ./bin/zkServer.sh stop

ZooKeeper JMX enabled by default

Using config: /home/zookeeper/zookeeper-3.4.10/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

VIII. Installing Hadoop

1. Creating the hadoop account

※ Perform this on all four nodes.

※ Run as the root account.

• namenode1

[root@namenode1 ~]# adduser hadoop

[root@namenode1 ~]# passwd hadoop

Changing password for user hadoop.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.

• rmnode1

[root@rmnode1 ~]# adduser hadoop

[root@rmnode1 ~]# passwd hadoop

Changing password for user hadoop.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.

• datanode1

[root@datanode1 ~]# adduser hadoop

[root@datanode1 ~]# passwd hadoop

Changing password for user hadoop.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.

• datanode2

[root@datanode2 ~]# adduser hadoop

[root@datanode2 ~]# passwd hadoop

Changing password for user hadoop.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.

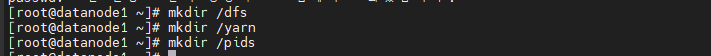

2. Setting permissions for the hadoop account

※ Perform this on all four nodes.

※ Run as the root account.

• Create the directories

• namenode1

[root@namenode1 ~]# mkdir /dfs

[root@namenode1 ~]# mkdir /yarn

[root@namenode1 ~]# mkdir /pids

• rmnode1

[root@rmnode1 ~]# mkdir /dfs

[root@rmnode1 ~]# mkdir /yarn

[root@rmnode1 ~]# mkdir /pids

• datanode1

[root@datanode1 ~]# mkdir /dfs

[root@datanode1 ~]# mkdir /yarn

[root@datanode1 ~]# mkdir /pids

• datanode2

[root@datanode2 ~]# mkdir /dfs

[root@datanode2 ~]# mkdir /yarn

[root@datanode2 ~]# mkdir /pids

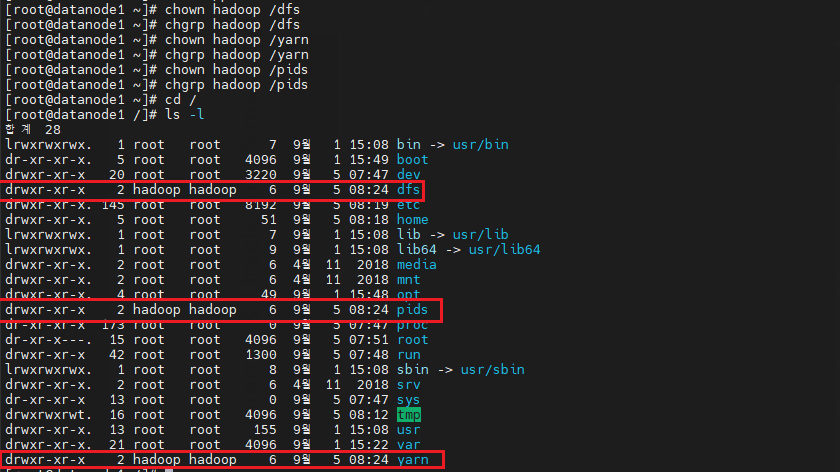

• Change the directory ownership and verify

• namenode1

[root@namenode1 ~]# chown hadoop /dfs

[root@namenode1 ~]# chgrp hadoop /dfs

[root@namenode1 ~]# chown hadoop /yarn

[root@namenode1 ~]# chgrp hadoop /yarn

[root@namenode1 ~]# chown hadoop /pids

[root@namenode1 ~]# chgrp hadoop /pids

[root@namenode1 ~]# cd /

[root@namenode1 /]# ls -l

• rmnode1

[root@rmnode1 ~]# chown hadoop /dfs

[root@rmnode1 ~]# chgrp hadoop /dfs

[root@rmnode1 ~]# chown hadoop /yarn

[root@rmnode1 ~]# chgrp hadoop /yarn

[root@rmnode1 ~]# chown hadoop /pids

[root@rmnode1 ~]# chgrp hadoop /pids

[root@rmnode1 ~]# cd /

[root@rmnode1 /]# ls -l

• datanode1

[root@datanode1 ~]# chown hadoop /dfs

[root@datanode1 ~]# chgrp hadoop /dfs

[root@datanode1 ~]# chown hadoop /yarn

[root@datanode1 ~]# chgrp hadoop /yarn

[root@datanode1 ~]# chown hadoop /pids

[root@datanode1 ~]# chgrp hadoop /pids

[root@datanode1 ~]# cd /

[root@datanode1 /]# ls -l

• datanode2

[root@datanode2 /]# chown hadoop /dfs

[root@datanode2 /]# chgrp hadoop /dfs

[root@datanode2 /]# chown hadoop /yarn

[root@datanode2 /]# chgrp hadoop /yarn

[root@datanode2 /]# chown hadoop /pids

[root@datanode2 /]# chgrp hadoop /pids

[root@datanode2 /]# cd /

[root@datanode2 /]# ls -l
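chown accepts the owner and group in a single argument (`owner:group`), so each chown/chgrp pair above can be collapsed into one call. The following is a sketch with a hypothetical make_owned_dirs helper. It is exercised here on a scratch directory with the invoking user's numeric IDs; the guide's real targets are /dfs, /yarn and /pids owned by hadoop, created as root.

```shell
#!/bin/sh
# Hypothetical helper: create each directory and set owner and group in one step.
# usage: make_owned_dirs <owner> <group> <dir>...
make_owned_dirs() {
    owner=$1
    group=$2
    shift 2
    for dir in "$@"; do
        mkdir -p "$dir"
        chown "$owner:$group" "$dir"
    done
}

# Demonstration on a scratch directory, using the invoking user and group.
scratch=$(mktemp -d)
make_owned_dirs "$(id -u)" "$(id -g)" "$scratch/dfs" "$scratch/yarn" "$scratch/pids"
ls -ld "$scratch"/*
```

On the cluster nodes the equivalent call as root would be `make_owned_dirs hadoop hadoop /dfs /yarn /pids`.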

3. Setting up SSH for the hadoop account

※ Perform this on all four nodes.

※ Run as the hadoop account.

※ Key generation is identical on all four nodes, but the ssh-copy-id targets differ per node, so take care.

• Switch to the hadoop account

[root@namenode1 /]# su - hadoop

• Generate an SSH key pair

[hadoop@namenode1 ~]$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa;\

> cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys;\

> chmod 0600 ~/.ssh/authorized_keys

Generating public/private rsa key pair.

Created directory '/home/hadoop/.ssh'.

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:F75s/R2zdGOi4t7/3kTezdfAdppnaybYyqy8yMinq/I hadoop@namenode1

The key's randomart image is:

+---[RSA 2048]----+

| |

| |

| . |

| . . . |

| S o + o|

| o o . O+|

| + +.o*%|

| . . o.oo+..++BX|

| oE.=+oo*+*o.B=o|

+----[SHA256]-----+

• SSH setup on namenode1

[hadoop@namenode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@rmnode1

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'rmnode1 (192.168.56.201)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@rmnode1's password: < enter the password of the hadoop account on rmnode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop@rmnode1'"

and check to make sure that only the key(s) you wanted were added.

[hadoop@namenode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@datanode1

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'datanode1 (192.168.56.202)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@datanode1's password: < enter the password of the hadoop account on datanode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop@datanode1'"

and check to make sure that only the key(s) you wanted were added.

[hadoop@namenode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@datanode2

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'datanode2 (192.168.56.203)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@datanode2's password: < enter the password of the hadoop account on datanode2 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop@datanode2'"

and check to make sure that only the key(s) you wanted were added.

• SSH setup on rmnode1

[hadoop@rmnode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@namenode1

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'namenode1 (192.168.56.200)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@namenode1's password: < enter the password of the hadoop account on namenode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop@namenode1'"

and check to make sure that only the key(s) you wanted were added.

[hadoop@rmnode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@datanode1

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'datanode1 (192.168.56.202)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@datanode1's password: < enter the password of the hadoop account on datanode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop@datanode1'"

and check to make sure that only the key(s) you wanted were added.

[hadoop@rmnode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@datanode2

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'datanode2 (192.168.56.203)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@datanode2's password: < enter the password of the hadoop account on datanode2 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop@datanode2'"

and check to make sure that only the key(s) you wanted were added.

• SSH setup on datanode1

[hadoop@datanode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@namenode1

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'namenode1 (192.168.56.200)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@namenode1's password: < enter the password of the hadoop account on namenode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop@namenode1'"

and check to make sure that only the key(s) you wanted were added.

[hadoop@datanode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@rmnode1

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'rmnode1 (192.168.56.201)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@rmnode1's password: < enter the password of the hadoop account on rmnode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop@rmnode1'"

and check to make sure that only the key(s) you wanted were added.

[hadoop@datanode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@datanode2

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'datanode2 (192.168.56.203)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@datanode2's password: < enter the password of the hadoop account on datanode2 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop@datanode2'"

and check to make sure that only the key(s) you wanted were added.

• SSH setup on datanode2

[hadoop@datanode2 ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@namenode1

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'namenode1 (192.168.56.200)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@namenode1's password: < enter the password of the hadoop account on namenode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop@namenode1'"

and check to make sure that only the key(s) you wanted were added.

[hadoop@datanode2 ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@rmnode1

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'rmnode1 (192.168.56.201)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@rmnode1's password: < enter the password of the hadoop account on rmnode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop@rmnode1'"

and check to make sure that only the key(s) you wanted were added.

[hadoop@datanode2 ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@datanode1

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'datanode1 (192.168.56.202)' can't be established.

ECDSA key fingerprint is SHA256:/hKOkCaiPMJKSk3PFXMxp8YTcZ72v7m8q+rrcj5Qrlc.

ECDSA key fingerprint is MD5:26:5d:ea:cf:25:87:6d:9f:41:d4:c1:0e:ba:b9:c6:77.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@datanode1's password: < enter the password of the hadoop account on datanode1 >

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop@datanode1'"

and check to make sure that only the key(s) you wanted were added.
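The same three ssh-copy-id calls are repeated on every node, so they can be wrapped in a loop. The following is only a minimal sketch: the function names peers_of and copy_key_to_peers are hypothetical, and it assumes the four hostnames used in this guide and an existing key at ~/.ssh/id_rsa.pub. Each call still asks for the remote hadoop password once.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of Hadoop or OpenSSH.
# NODES lists the four hostnames used in this guide.
NODES="namenode1 rmnode1 datanode1 datanode2"

# Print every node in NODES except the one passed as $1.
peers_of() {
    local self="$1" node
    for node in $NODES; do
        [ "$node" != "$self" ] && echo "$node"
    done
    return 0
}

# Run ssh-copy-id once for each peer of the current host.
copy_key_to_peers() {
    local peer
    for peer in $(peers_of "$(hostname -s)"); do
        ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "hadoop@${peer}"
    done
}
```

After sourcing the script as the hadoop user, running copy_key_to_peers on a node would replace the three manual commands shown above for that node.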

• Verify SSH access from namenode1

[hadoop@namenode1 ~]$ ssh rmnode1

[hadoop@namenode1 ~]$ ssh datanode1

[hadoop@namenode1 ~]$ ssh datanode2

[hadoop@namenode1 ~]$ ssh rmnode1

Last login: Tue Sep 5 08:45:08 2023

[hadoop@rmnode1 ~]$ exit

logout

Connection to rmnode1 closed.

[hadoop@namenode1 ~]$ ssh datanode1

Last login: Tue Sep 5 08:45:20 2023

[hadoop@datanode1 ~]$ exit

logout

Connection to datanode1 closed.

[hadoop@namenode1 ~]$ ssh datanode2

Last login: Tue Sep 5 08:45:27 2023

[hadoop@datanode2 ~]$ exit

logout

Connection to datanode2 closed.

[hadoop@namenode1 ~]$

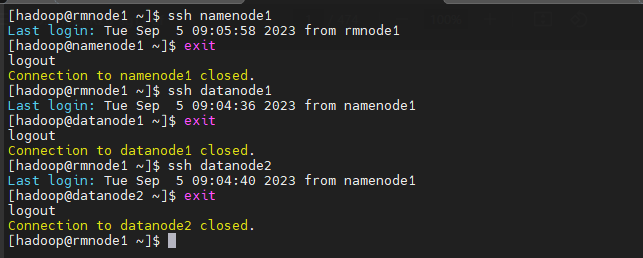

• Verify SSH access from rmnode1

[hadoop@rmnode1 ~]$ ssh namenode1

[hadoop@rmnode1 ~]$ ssh datanode1

[hadoop@rmnode1 ~]$ ssh datanode2

[hadoop@rmnode1 ~]$ ssh namenode1

Last login: Tue Sep 5 09:05:58 2023 from rmnode1

[hadoop@namenode1 ~]$ exit

logout

Connection to namenode1 closed.

[hadoop@rmnode1 ~]$ ssh datanode1

Last login: Tue Sep 5 09:04:36 2023 from namenode1

[hadoop@datanode1 ~]$ exit

logout

Connection to datanode1 closed.

[hadoop@rmnode1 ~]$ ssh datanode2

Last login: Tue Sep 5 09:04:40 2023 from namenode1

[hadoop@datanode2 ~]$ exit

logout

Connection to datanode2 closed.

[hadoop@rmnode1 ~]$

• Verify SSH access from datanode1

[hadoop@datanode1 ~]$ ssh namenode1

[hadoop@datanode1 ~]$ ssh rmnode1

[hadoop@datanode1 ~]$ ssh datanode2

[hadoop@datanode1 ~]$ ssh namenode1

Last login: Tue Sep 5 09:06:10 2023 from rmnode1

[hadoop@namenode1 ~]$ exit

logout

Connection to namenode1 closed.

[hadoop@datanode1 ~]$ ssh rmnode1

Last login: Tue Sep 5 09:04:31 2023 from namenode1

[hadoop@rmnode1 ~]$ exit

logout

Connection to rmnode1 closed.

[hadoop@datanode1 ~]$ ssh datanode2

Last login: Tue Sep 5 09:06:21 2023 from rmnode1

[hadoop@datanode2 ~]$ exit

logout

Connection to datanode2 closed.

[hadoop@datanode1 ~]$

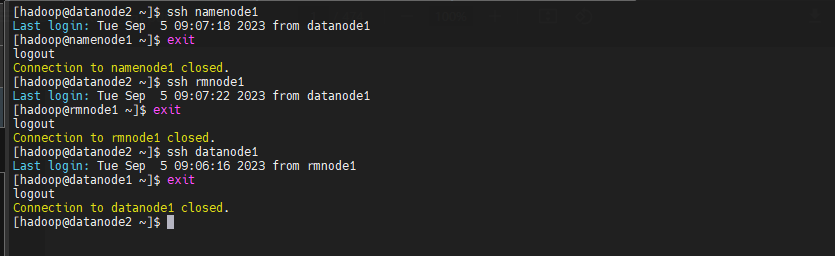

• Verify SSH access from datanode2

[hadoop@datanode2 ~]$ ssh namenode1

[hadoop@datanode2 ~]$ ssh rmnode1

[hadoop@datanode2 ~]$ ssh datanode1

[hadoop@datanode2 ~]$ ssh namenode1

Last login: Tue Sep 5 09:07:18 2023 from datanode1

[hadoop@namenode1 ~]$ exit

logout

Connection to namenode1 closed.

[hadoop@datanode2 ~]$ ssh rmnode1

Last login: Tue Sep 5 09:07:22 2023 from datanode1

[hadoop@rmnode1 ~]$ exit

logout

Connection to rmnode1 closed.

[hadoop@datanode2 ~]$ ssh datanode1

Last login: Tue Sep 5 09:06:16 2023 from rmnode1

[hadoop@datanode1 ~]$ exit

logout

Connection to datanode1 closed.

[hadoop@datanode2 ~]$

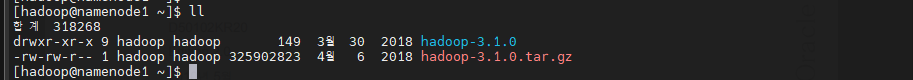
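The manual logins above can also be checked non-interactively. The sketch below is an assumption-laden example rather than part of the original procedure: check_passwordless and the SSH_BIN variable are hypothetical names, while BatchMode and ConnectTimeout are standard OpenSSH client options. With BatchMode=yes, ssh fails instead of prompting, so any host that still asks for a password is reported as FAIL.

```shell
#!/usr/bin/env bash
# Hypothetical check, not part of Hadoop or OpenSSH.
# SSH_BIN can be overridden so the loop can be dry-run without a cluster.
SSH_BIN="${SSH_BIN:-ssh}"

# Try a key-based login to each host given as an argument.
# Returns non-zero if at least one host could not be reached without a password.
check_passwordless() {
    local host failed=0
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of prompting for a password.
        if "$SSH_BIN" -o BatchMode=yes -o ConnectTimeout=5 "hadoop@${host}" true; then
            echo "OK   ${host}"
        else
            echo "FAIL ${host}"
            failed=1
        fi
    done
    return "$failed"
}
```

On namenode1, for example, it could be called as check_passwordless rmnode1 datanode1 datanode2.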

4. Installing Hadoop

※ Perform these steps on namenode1 (Hadoop is installed on namenode1 and then distributed to the other nodes).

※ Perform these steps as the hadoop account.

• Download Hadoop

[hadoop@namenode1 ~]$ wget https://archive.apache.org/dist/hadoop/core/hadoop-3.1.0/hadoop-3.1.0.tar.gz

[hadoop@namenode1 ~]$ wget https://archive.apache.org/dist/hadoop/core/hadoop-3.1.0/hadoop-3.1.0.tar.gz

--2023-09-05 09:11:51-- https://archive.apache.org/dist/hadoop/core/hadoop-3.1.0/hadoop-3.1.0.tar.gz

Resolving archive.apache.org (archive.apache.org)... 65.108.204.189, 2a01:4f9:1a:a084::2

Connecting to archive.apache.org (archive.apache.org)|65.108.204.189|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 325902823 (311M) [application/x-gzip]

Saving to: ‘hadoop-3.1.0.tar.gz’

100%[=====================================================================>] 325,902,823 813KB/s in 3m 52s

2023-09-05 09:15:45 (1.34 MB/s) - ‘hadoop-3.1.0.tar.gz’ saved [325902823/325902823]

• Extract the Hadoop archive

[hadoop@namenode1 ~]$ tar xvzf hadoop-3.1.0.tar.gz

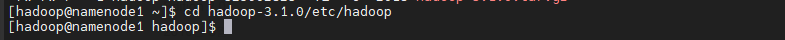

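5. Configuring the Hadoop files

※ Perform these steps on namenode1 (Hadoop is installed on namenode1 and then distributed to the other nodes).

※ Perform these steps as the hadoop account.

• Move to the Hadoop configuration directory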

[hadoop@namenode1 ~]$ cd hadoop-3.1.0/etc/hadoop

• Edit the workers file

※ Enter the hostnames of the nodes that will run as workers (slaves).

※ Comment out ( # ) the localhost entry that is already in the file.

[hadoop@namenode1 hadoop]$ vi workers

rmnode1

datanode1

datanode2
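If you prefer not to open vi, the same workers file can be written with a here-document. This is a minimal sketch under the assumptions of this guide: write_workers is a hypothetical helper name, and its argument is the configuration directory (etc/hadoop under the extracted hadoop-3.1.0 directory). The localhost entry is kept as a comment, as described above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the workers file without opening an editor.
# $1 is the Hadoop configuration directory (etc/hadoop in this guide).
write_workers() {
    local conf_dir="$1"
    # Quoted 'EOF' keeps the content literal (no variable expansion).
    cat > "${conf_dir}/workers" <<'EOF'
# localhost
rmnode1
datanode1
datanode2
EOF
}
```

For the layout used here, the call would be write_workers "$HOME/hadoop-3.1.0/etc/hadoop". Note that it overwrites any existing workers file in that directory.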

• Edit the core-site.xml file

※ This file holds the settings shared by HDFS and MapReduce.

※ Enter the following between <configuration> and </configuration>.

[hadoop@namenode1 hadoop]$ vi core-site.xml

<property>

<name>fs.defaultFS</name>

<value>hdfs://mycluster</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>namenode1:2181,rmnode1:2181,datanode1:2181</value>

</property>

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://mycluster</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>namenode1:2181,rmnode1:2181,datanode1:2181</value>

</property>

</configuration>
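A typo in a property name is easy to miss in these files, so it can help to read a value back after saving. The helper below is only a rough sketch: get_property is a hypothetical name, and it assumes that each <name> and <value> sits on its own line with the value directly after the name, as in the listing above. It is a plain grep/sed lookup, not an XML parser.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the <value> that directly follows a given <name>.
# Assumes one element per line, with <value> on the line right after <name>.
get_property() {
    local file="$1" key="$2"
    # grep -A1 prints the matching <name> line plus the next line;
    # sed then extracts the text between <value> and </value>.
    grep -A1 "<name>${key}</name>" "$file" \
        | sed -n 's:.*<value>\(.*\)</value>.*:\1:p'
}
```

For example, get_property core-site.xml fs.defaultFS run in the configuration directory should print the value entered for that property, and print nothing if the name was mistyped.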

• Edit the hdfs-site.xml file

※ This file holds the settings used by HDFS.

※ Enter the following between <configuration> and </configuration>.

[hadoop@namenode1 hadoop]$ vi hdfs-site.xml

<property>

<name>dfs.client.failover.proxy.provider.mycluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/dfs/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/dfs/datanode</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/dfs/journalnode</value>

</property>

<property>

<name>dfs.nameservices</name>

<value>mycluster</value>

</property>

<property>

<name>dfs.ha.namenodes.mycluster</name>

<value>namenode1,rmnode1</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.namenode1</name>

<value>namenode1:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.rmnode1</name>

<value>rmnode1:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.namenode1</name>

<value>namenode1:9870</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.rmnode1</name>

<value>rmnode1:9870</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://namenode1:8485;rmnode1:8485;datanode1:8485/mycluster</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_rsa</value>

</property>

<configuration>

<property>

<name>dfs.client.failover.proxy.provider.mycluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/dfs/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/dfs/datanode</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/dfs/journalnode</value>

</property>

<property>

<name>dfs.nameservices</name>

<value>mycluster</value>

</property>

<property>

<name>dfs.ha.namenodes.mycluster</name>

<value>namenode1,rmnode1</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.namenode1</name>

<value>namenode1:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.rmnode1</name>

<value>rmnode1:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.namenode1</name>

<value>namenode1:9870</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.rmnode1</name>

<value>rmnode1:9870</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>